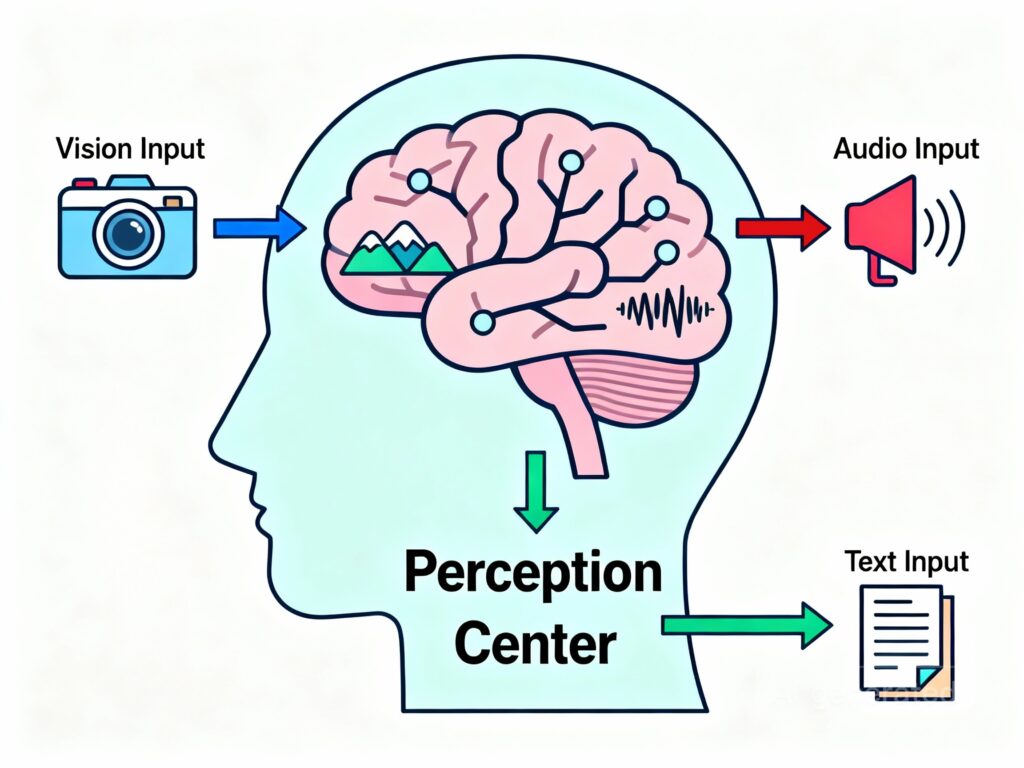

Perception refers to how an intelligent system observes, senses, understands, and interprets its environment in a meaningful manner.

For example, humans use their eyes, ears, touch, and mind to make sense of their surroundings. In a similar way, AGI uses cameras, microphones, sensors, pattern recognition, and reasoning to understand what is happening around it, treating its inputs not just as raw data but as real-world context.

Why Perception Matters in AGI

A normal AI system can process information, but it usually does not know what that information truly means.

For example, if a normal AI sees a red traffic signal, it just recognizes the color red. But AGI understands the scene more deeply, so it can infer the following:

- A red signal means stop

- Vehicles must pause for safety

- Crossing now could be dangerous

How Perception Works in AGI

AGI perception has two major steps:

- Sensing the Environment

- Interpreting the Meaning

1) Sensing the Environment: AGI collects information from cameras, microphones, sensors (temperature, distance, movement), and text and data inputs. This process is similar to how our eyes and ears work.

2) Interpreting the Meaning: The system then applies logical reasoning, pattern recognition, memory, and context analysis to that data to work out what it means.

This means AGI perception is not only seeing and hearing, but understanding the world through reasoning.
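The two steps above can be sketched in a few lines of Python. This is only a toy illustration, not how a real AGI would be built: the `Observation` class, the `sense` and `interpret` functions, and the `MEANINGS` table are all hypothetical names made up for this example, and the camera reading is hard-coded.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Raw result of the sensing step (hypothetical fields)."""
    source: str  # which sensor produced it, e.g. "camera"
    label: str   # what was detected, e.g. "traffic_light"
    color: str   # e.g. "red"


# Hypothetical lookup table standing in for memory and context analysis.
MEANINGS = {
    ("traffic_light", "red"): [
        "A red signal means stop",
        "Vehicles must pause for safety",
        "Crossing now could be dangerous",
    ],
}


def sense() -> Observation:
    """Step 1: collect raw data. A real system would read a camera frame here."""
    return Observation(source="camera", label="traffic_light", color="red")


def interpret(obs: Observation) -> list[str]:
    """Step 2: attach meaning to the raw observation."""
    default = ["No known meaning for this observation"]
    return MEANINGS.get((obs.label, obs.color), default)


if __name__ == "__main__":
    for meaning in interpret(sense()):
        print(meaning)
```

The point of the split is that `sense` only reports what is there, while `interpret` is where meaning gets attached. A fixed lookup table is far simpler than the reasoning described above, but it shows where that reasoning would sit.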

How Perception Is Useful in Real Life

Perception is one of the most important capabilities in AGI because it allows a machine to understand real-world situations the way humans do, instead of just following patterns.

When AGI can see, hear, sense, and interpret situations correctly, it becomes capable of making intelligent decisions, solving problems, and adapting to new environments.

Here are some real-life examples where AGI perception is valuable:

1. Self-Driving Cars

A normal AI can only recognize objects. For example, it can tell that something is a car, tree, or person on the road. But AGI with perception does more than recognition because it can understand the situation, not just what is visible.

Let’s understand some examples:

1) If a child is standing near the road, AGI understands that the child might suddenly start running, so it will slow the car down like a careful human driver would.

2) If the road is wet or it is raining, AGI understands that the surface is slippery and reduces speed, not because of a rule, but because the situation requires it.
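To make the two examples concrete, here is a minimal sketch that turns a perceived scene into a target speed. Note the limitation: this code uses fixed, hand-written rules, which is exactly what the text says AGI should go beyond, so treat it only as a picture of "situation in, decision out". The `Scene` class, the `target_speed` function, and the slowdown factors (0.5 and 0.7) are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """What the perception step reports about the road (hypothetical fields)."""
    child_near_road: bool
    road_wet: bool


def target_speed(scene: Scene, speed_limit_kmh: float) -> float:
    """Pick a speed that fits the situation, never above the speed limit."""
    factor = 1.0
    if scene.child_near_road:
        # A child might suddenly run, so slow down a lot (assumed factor).
        factor = min(factor, 0.5)
    if scene.road_wet:
        # Wet surface means longer braking distance (assumed factor).
        factor = min(factor, 0.7)
    return speed_limit_kmh * factor


if __name__ == "__main__":
    print(target_speed(Scene(child_near_road=True, road_wet=False), 50.0))
```

When both conditions are true, the sketch simply keeps the more cautious of the two factors.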

2. Smart Customer Support With Feelings

Currently, most chatbots read your words and reply without understanding how you are feeling while you type. But AGI can understand the emotions behind your message.

For example:

- If you type messages fast and with mistakes, AGI understands that you are stressed.

- If you use short, sharp sentences, AGI understands that you are angry or irritated.

- And if you ask the same thing repeatedly, AGI understands that you are confused.

Based on these signals, AGI changes its tone and replies in a way that fits your emotional state.
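The three signals above can be approximated with very simple checks. The sketch below is a crude heuristic, not real emotion understanding: the `Message` class, the `guess_mood` and `reply_opener` functions, the thresholds, and the reply texts are all hypothetical, and it assumes the chat client can supply a typo count and the typing time.

```python
from dataclasses import dataclass


@dataclass
class Message:
    text: str
    typo_count: int         # assumed to come from a spell checker
    seconds_to_type: float  # assumed to be measured by the chat client


def guess_mood(msg: Message, earlier_texts: list[str]) -> str:
    """Return a rough mood label based on the three signals from the list."""
    words = msg.text.split()
    normalized = msg.text.strip().lower()

    # Asking the same thing again suggests confusion.
    if any(normalized == old.strip().lower() for old in earlier_texts):
        return "confused"

    # Fast typing with several mistakes suggests stress (assumed thresholds).
    words_per_second = len(words) / max(msg.seconds_to_type, 0.1)
    if words_per_second > 3 and msg.typo_count >= 2:
        return "stressed"

    # A very short message ending in "!" is treated as short and sharp.
    if len(words) <= 4 and msg.text.rstrip().endswith("!"):
        return "irritated"

    return "neutral"


# Hypothetical reply openers, one per mood label.
OPENERS = {
    "confused": "Let me explain that step by step.",
    "stressed": "No rush, I am here to help.",
    "irritated": "I am sorry for the trouble.",
    "neutral": "Sure, happy to help.",
}


def reply_opener(msg: Message, earlier_texts: list[str]) -> str:
    """Choose the tone of the reply from the guessed mood."""
    return OPENERS[guess_mood(msg, earlier_texts)]
```

For instance, `reply_opener(Message("Fix this now!", 0, 4.0), [])` picks the apologetic opener, because the message is short and ends with an exclamation mark.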

Advantages of Perception in AGI

1. Context Awareness

AGI does not react only to the surface of a situation; it also understands what lies behind it. For example, if someone says “I’m fine” but their tone is sad, AGI understands that something is actually wrong.

This type of understanding resembles how the human brain works.

2. Predictive Understanding

AGI can read the current situation and estimate what will happen next. For example, if a person is walking carelessly near a road, AGI can predict danger and warn them. This ability is useful for anticipating future risks.
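A very small version of this idea is to extrapolate from what is sensed now to what happens in the next few seconds. The sketch below assumes the system already knows a person's distance from the road and their speed toward it, and it assumes straight-line movement at constant speed. The function names and the 3-second warning horizon are hypothetical choices for this example.

```python
from typing import Optional


def seconds_until_road(distance_to_road_m: float,
                       speed_toward_road_mps: float) -> Optional[float]:
    """Estimate when the person reaches the road, or None if not approaching."""
    if speed_toward_road_mps <= 0:
        return None  # standing still or walking away
    return distance_to_road_m / speed_toward_road_mps


def should_warn(distance_to_road_m: float,
                speed_toward_road_mps: float,
                horizon_s: float = 3.0) -> bool:
    """Warn if the person is predicted to reach the road within the horizon."""
    eta = seconds_until_road(distance_to_road_m, speed_toward_road_mps)
    return eta is not None and eta <= horizon_s


if __name__ == "__main__":
    # 2 m from the road, walking toward it at 1 m/s: about 2 s away.
    print(should_warn(2.0, 1.0))
```

Real prediction would have to deal with changing speed and direction; the constant-speed assumption is only the simplest possible starting point.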

3. Learning Through Experience

Normal AI needs new data for every situation, but AGI learns from real-life interaction, the same way we learn from experience.

So if AGI makes a mistake once, it can improve on its own, without retraining.

Disadvantages & Limitations of Perception in AGI

Perception also creates some challenges, so let's look at its main limitations.

1. Privacy Concerns

To understand emotions, tone, facial expressions, and surroundings, AGI needs access to our cameras, microphones, chat history, and personal patterns. This raises privacy risks if that access is not properly controlled.

2. Misinterpretation Can Happen

Humans are complex, and even humans sometimes misunderstand each other, so AGI may also misread emotions, make wrong assumptions, and respond incorrectly. Even one small misunderstanding can create big confusion.

3. High Computational Cost

AGI processes multiple senses and their context in real time, so it needs very powerful processors, large memory, and a huge energy supply. This makes the system expensive to run and maintain.

4. Security Risks

Someone with bad intentions could access this data and use it to emotionally influence people. For example, a misused AGI could create false messages, news, or explanations.

M.Sc. (Information Technology). I explain AI, AGI, Programming and future technologies in simple language. Founder of BoxOfLearn.com.